Building a Robust pSEO Data Pipeline

A practical guide to building data pipelines that scale from 100 to 100,000 pages without breaking.

Overview

Your programmatic SEO site is only as good as your data. This guide covers how to build a data pipeline that's reliable, scalable, and maintainable.

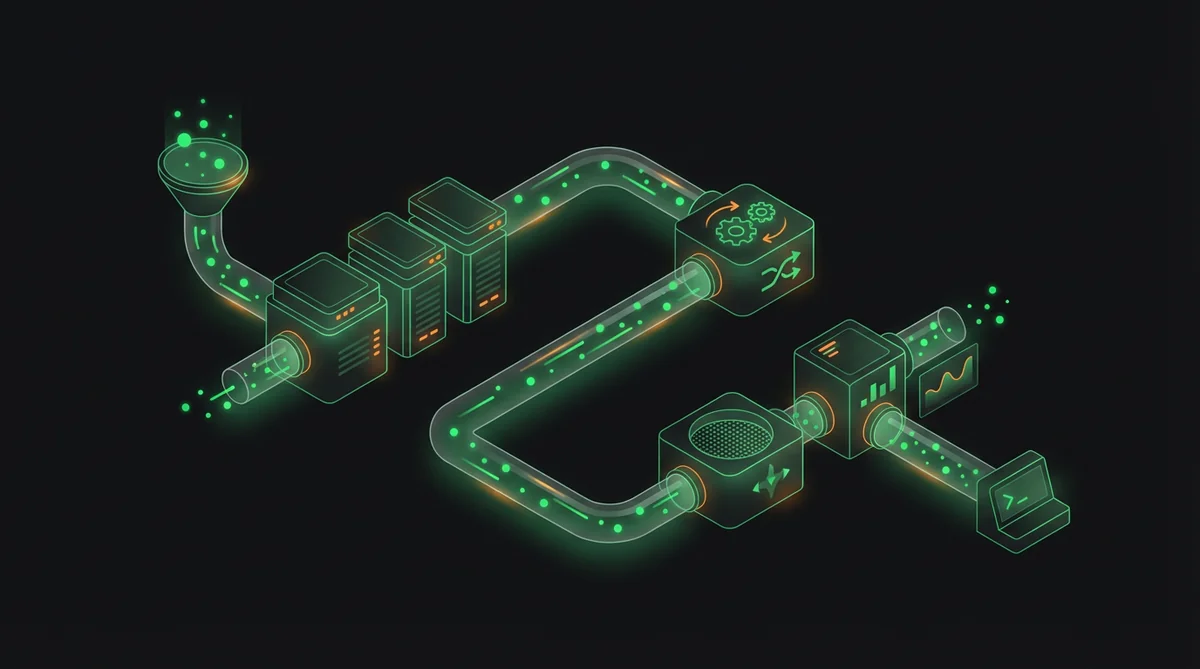

Pipeline Architecture

Core Components

- Data Sources: APIs, scrapers, manual inputs

- Ingestion Layer: Collection and normalization

- Storage: Database with proper indexing

- Processing: Enrichment, validation, deduplication

- Output: API for your frontend or static site generator (SSG)

Recommended Architecture

Sources → Ingestion (Airflow/Cron) → PostgreSQL → Validation → API → SSG Build

Step 1: Data Ingestion

API Ingestion

- Throttle requests to stay within each API's rate limits

- Implement exponential backoff for failures

- Log all requests for debugging

- Cache responses to reduce API calls
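The four points above can be combined in a small client wrapper. The following is a minimal sketch, not a definitive implementation: `TransientError`, `fetch_with_backoff`, and `CachedClient` are hypothetical names, the `fetch` callable stands in for whatever HTTP library you use, and the in-memory cache would likely be replaced by Redis or disk storage in production.

```python
import logging
import random
import time
from typing import Callable, Dict, Optional

logger = logging.getLogger("ingest")


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, HTTP 429/5xx)."""


def fetch_with_backoff(
    fetch: Callable[[str], dict],
    url: str,
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Call fetch(url), retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            logger.info("GET %s (attempt %d)", url, attempt)
            return fetch(url)
        except TransientError as exc:
            if attempt == max_attempts:
                logger.error("giving up on %s: %s", url, exc)
                raise
            # The delay ceiling doubles each attempt (1s, 2s, 4s, ...), capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter: pick a random wait below the ceiling so clients do not retry in lockstep.
            wait = random.uniform(0, ceiling)
            logger.warning("%s failed (%s); retrying in %.2fs", url, exc, wait)
            sleep(wait)
    raise ValueError("max_attempts must be at least 1")


class CachedClient:
    """Adds a minimum interval between requests and an in-memory response cache."""

    def __init__(self, fetch, min_interval=0.5, clock=time.monotonic, sleep=time.sleep):
        self._fetch = fetch
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_call: Optional[float] = None
        self._cache: Dict[str, dict] = {}

    def get(self, url: str) -> dict:
        if url in self._cache:
            return self._cache[url]  # cache hit: no API call, no throttling
        if self._last_call is not None:
            elapsed = self._clock() - self._last_call
            if elapsed < self._min_interval:
                self._sleep(self._min_interval - elapsed)
        self._last_call = self._clock()
        result = fetch_with_backoff(self._fetch, url, sleep=self._sleep)
        self._cache[url] = result
        return result
```

Passing `sleep` and `clock` as parameters keeps the retry and throttling logic testable without real waiting.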

Web Scraping

- Respect robots.txt and rate limits

- Use rotating proxies for scale

- Handle dynamic content (Playwright/Puppeteer)

- Monitor for structure changes
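As a rough illustration of the first and last points, the sketch below filters URLs through robots.txt rules using Python's standard `urllib.robotparser` and flags a likely layout change when too many scraped records come back incomplete. The user agent string, the helper names, and the 20% threshold are assumptions chosen for the example.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "pseo-pipeline-bot"  # hypothetical user agent for this example


def build_robots(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser: RobotFileParser, urls, user_agent: str = USER_AGENT):
    """Keep only the URLs this user agent is permitted to fetch."""
    return [u for u in urls if parser.can_fetch(user_agent, u)]


def polite_delay(parser: RobotFileParser, default: float = 1.0, user_agent: str = USER_AGENT) -> float:
    """Use the site's Crawl-delay if it declares one, else fall back to a default."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


def structure_changed(records, required_fields, max_missing_ratio: float = 0.2) -> bool:
    """Flag a likely page-structure change when too many records lack required fields."""
    if not records:
        return True  # an empty scrape is itself a warning sign
    incomplete = sum(
        1 for r in records if any(not r.get(f) for f in required_fields)
    )
    return incomplete / len(records) > max_missing_ratio
```

A scraper could call `structure_changed` after each batch and pause the source for review instead of writing half-empty rows to the database.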

Step 2: Data Storage

Schema Design

- Normalize tables so each fact is stored once and stays consistent

- Index columns used in queries

- Use JSONB for flexible attributes

- Track updated_at for freshness
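One way to apply the last two points when preparing rows for insertion: keep stable fields as real columns, push everything else into a JSON document destined for the JSONB column, and stamp each row with a fresh `updated_at`. This is a sketch under assumptions: the column names follow the `entities` table in this guide, while `CORE_FIELDS`, `slugify`, and `to_row` are hypothetical helpers.

```python
import json
import re
import unicodedata
from datetime import datetime, timezone

# Fields assumed to have dedicated columns; everything else goes into attributes.
CORE_FIELDS = {"name", "category_id"}


def slugify(name: str) -> str:
    """Build a URL-safe slug: strip accents, lowercase, collapse non-alphanumerics to '-'."""
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_name.lower()).strip("-")


def to_row(raw: dict) -> dict:
    """Map an ingested record onto the columns of the entities table."""
    name = " ".join(str(raw["name"]).split())  # collapse stray whitespace
    extras = {k: v for k, v in raw.items() if k not in CORE_FIELDS}
    return {
        "slug": slugify(name),
        "name": name,
        "category_id": raw.get("category_id"),
        # Serialized JSON text; PostgreSQL can cast this to JSONB on insert.
        "attributes": json.dumps(extras, sort_keys=True),
        "updated_at": datetime.now(timezone.utc),
    }
```

Because `updated_at` is set in application code here, every write path needs to go through `to_row` (or a database trigger) for the freshness value to stay trustworthy.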

Example Schema

CREATE TABLE entities (
    id SERIAL PRIMARY KEY,
    slug VARCHAR(255) UNIQUE NOT NULL,
    name VARCHAR(255) NOT NULL,
    category_id INTEGER REFERENCES categories(id),
    attributes JSONB,
    status VARCHAR(50) DEFAULT 'draft',
    created_at TIMESTAMP DEFAULT NOW(),
    updated_at TIMESTAMP DEFAULT NOW()
);

Step 3: Data Validation

Validation Rules

- Completeness: Required fields present

- Format: URLs valid, dates parseable

- Business logic: Prices are positive, ratings fall between 1 and 5

- Uniqueness: No duplicate entities
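The four rule types above could be expressed as a single validator that returns a list of problems per record. This is an illustrative sketch: the field names `url`, `listed_on`, `price`, and `rating` are assumptions about what your entities contain, and dates are assumed to arrive as ISO 8601 strings.

```python
from datetime import date
from urllib.parse import urlparse

REQUIRED_FIELDS = ("slug", "name")  # assumed required fields


def validate_entity(entity: dict) -> list:
    """Return a list of human-readable validation errors (empty means valid)."""
    errors = []

    # Completeness: required fields must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if not entity.get(field):
            errors.append(f"missing required field: {field}")

    # Format: URLs need an http(s) scheme and a host; dates must parse.
    url = entity.get("url")
    if url is not None:
        parts = urlparse(url) if isinstance(url, str) else None
        if parts is None or parts.scheme not in ("http", "https") or not parts.netloc:
            errors.append(f"invalid url: {url!r}")
    listed_on = entity.get("listed_on")
    if listed_on is not None:
        try:
            date.fromisoformat(listed_on)
        except (TypeError, ValueError):
            errors.append(f"unparseable date: {listed_on!r}")

    # Business logic: prices positive, ratings between 1 and 5.
    price = entity.get("price")
    if price is not None and not (isinstance(price, (int, float)) and price > 0):
        errors.append(f"price must be positive: {price!r}")
    rating = entity.get("rating")
    if rating is not None and not (isinstance(rating, (int, float)) and 1 <= rating <= 5):
        errors.append(f"rating must be between 1 and 5: {rating!r}")

    return errors


def find_duplicate_slugs(entities) -> list:
    """Uniqueness: return slugs that appear more than once, in order of detection."""
    seen, duplicates = set(), []
    for entity in entities:
        slug = entity.get("slug")
        if slug in seen and slug not in duplicates:
            duplicates.append(slug)
        seen.add(slug)
    return duplicates
```

Returning all errors rather than raising on the first one makes it easier to log failure counts per rule.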

Quality Monitoring

- Set up alerts for validation failure spikes

- Track data freshness per source

- Monitor for duplicate content

- Regular audits of sample pages
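For the first point, a spike can be defined relative to a recent baseline rather than as a fixed number. The sketch below assumes you record one failure rate per pipeline run; the function names, the 2x multiplier, and the 5% floor are illustrative choices, not recommendations from any particular tool.

```python
from statistics import mean


def failure_rate(failed: int, total: int) -> float:
    """Share of records that failed validation in one run."""
    return failed / total if total else 0.0


def is_failure_spike(history, current: float, factor: float = 2.0, floor: float = 0.05) -> bool:
    """Return True when the current failure rate is well above the recent average.

    history: failure rates from recent runs (for example, the last 7).
    factor:  how many times the baseline counts as a spike.
    floor:   minimum rate worth alerting on, so tiny baselines do not cause noise.
    """
    if not history:
        return current > floor
    baseline = mean(history)
    return current > max(floor, baseline * factor)
```

With a history of 1%, 2%, and 3%, a run at 10% would trigger an alert while a run at 4% would not, because the threshold is the larger of the floor (5%) and twice the baseline (4%).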

Step 4: Automation

Scheduling

- High-frequency data: Hourly updates

- Moderate-frequency data: Daily updates

- Static data: Weekly/monthly
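The tiers above translate naturally into a lookup table of refresh intervals that a scheduler can consult. This is a minimal sketch: the tier keys, the `sources` record shape, and the choice of weekly (rather than monthly) for static data are assumptions for the example.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Refresh interval per tier, following the schedule described above.
REFRESH_INTERVALS = {
    "high": timedelta(hours=1),
    "moderate": timedelta(days=1),
    "static": timedelta(weeks=1),
}


def is_due(tier: str, last_run: Optional[datetime], now: Optional[datetime] = None) -> bool:
    """A source is due if it has never run or its interval has elapsed."""
    if last_run is None:
        return True
    now = now or datetime.now(timezone.utc)
    return now - last_run >= REFRESH_INTERVALS[tier]


def due_sources(sources, now: Optional[datetime] = None) -> list:
    """Return the names of sources that should be refreshed in this scheduler tick."""
    return [s["name"] for s in sources if is_due(s["tier"], s.get("last_run"), now)]
```

A single cron entry that runs hourly and calls `due_sources` can then drive all three tiers without separate schedules.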

Tools

- Simple pipelines: Cron jobs

- Medium complexity: GitHub Actions

- Complex workflows: Apache Airflow, Dagster

Step 5: Monitoring

Key Metrics

- Pipeline success rate

- Data freshness (time since last update)

- Entity coverage (% with complete data)

- Error rates by source
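These four metrics can be computed from two inputs most pipelines already have: a log of runs and the current set of entities. The sketch below assumes each run record is a dict with `source` and `ok` keys, and that "complete" means every required field is non-empty; all function names are hypothetical.

```python
from collections import Counter
from datetime import datetime


def pipeline_success_rate(runs) -> float:
    """Fraction of runs that finished without error."""
    return sum(1 for r in runs if r["ok"]) / len(runs) if runs else 0.0


def error_rate_by_source(runs) -> dict:
    """Fraction of failed runs for each source."""
    totals = Counter(r["source"] for r in runs)
    failures = Counter(r["source"] for r in runs if not r["ok"])
    return {source: failures[source] / totals[source] for source in totals}


def entity_coverage(entities, required_fields) -> float:
    """Percentage of entities with every required field populated."""
    if not entities:
        return 0.0
    complete = sum(
        1 for e in entities if all(e.get(f) not in (None, "") for f in required_fields)
    )
    return 100.0 * complete / len(entities)


def freshness_hours(last_updated: datetime, now: datetime) -> float:
    """Hours elapsed since the most recent update."""
    return (now - last_updated).total_seconds() / 3600
```

Emitting these values after every run, to a dashboard or even a log line, gives you a baseline to alert against later.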